Code and Game Design

I’ve been programming since I was 10, and I haven’t stopped! I majored in Computer Science and minored in Video Game Development at Heidelberg University, and I have taught myself more through an assortment of personal projects. Listed below are some highlights from my past work, both in and out of school.

Click here to visit my GitHub page.

Completed Projects

- Fractured: A puzzle-platformer made for SkillsUSA 2019.

- havenspace: A web-based musical piece with interactive elements.

- GLYPH: An AR-powered music installation that runs on mobile devices.

- The Ballad of Lugh: A platformer game made in 96 hours, which won Jame Gam #32.

Fractured

During my junior and senior years of high school, I was part of Mayfield's Excel TECC program, studying Information Technology and Programming. I spent a few hours each day in a classroom studying computer science topics, and my second year of the program focused on game development with Unity. As part of the course, I joined a two-person team developing a game for the SkillsUSA 2019 Ohio State conference. The resulting game was called "Fractured".

The game is a puzzle-platformer developed in Unity, which centers around the core mechanic of moving pieces of the level around as you go. In the team, I focused on developing the code and assets for the game, while my teammate worked more on the associated presentation for the conference. We ended up earning second place at the competition!

Click here to play Fractured on itch.io.

Return to top

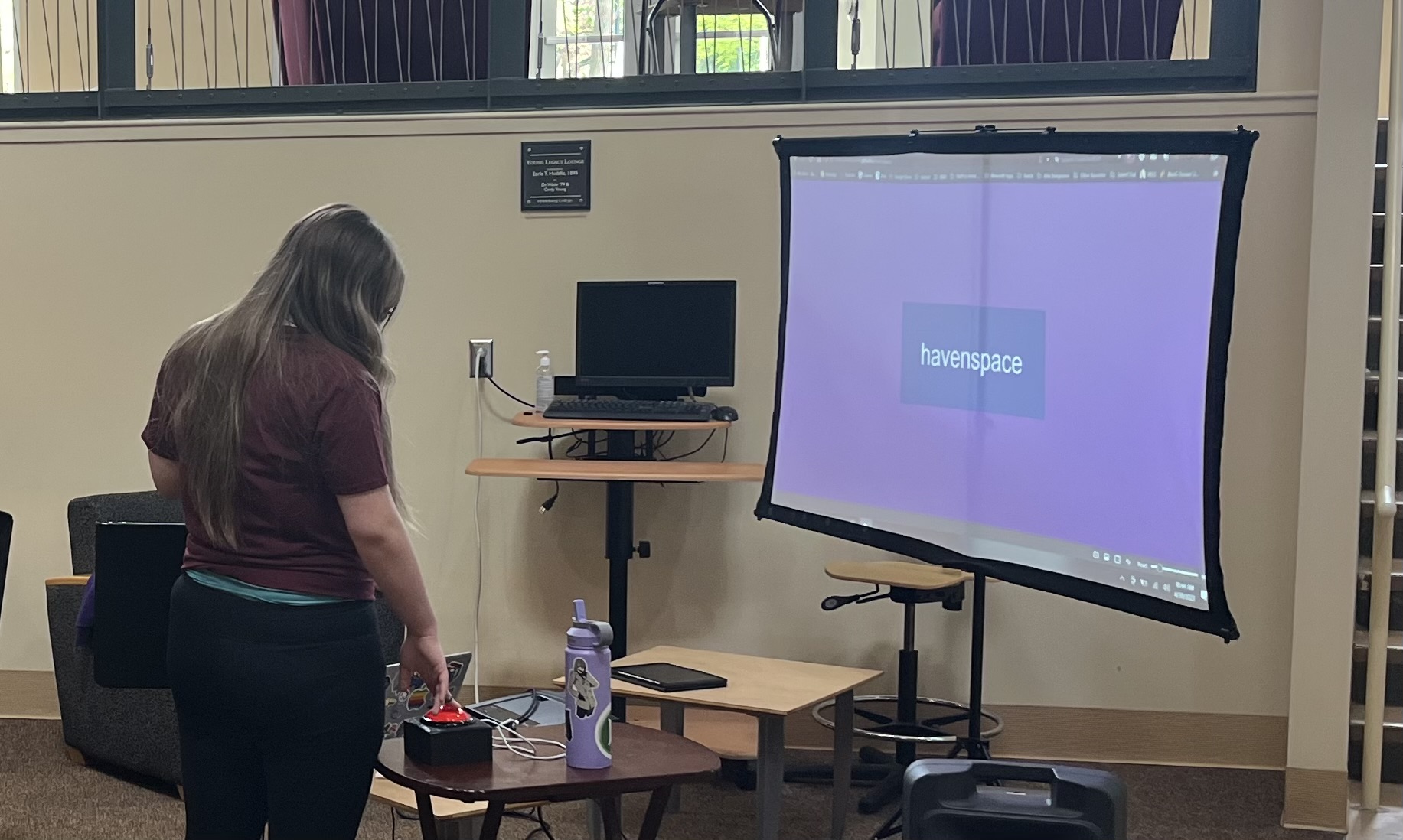

havenspace

havenspace is an interactive musical piece which runs in a web browser. It consists of a simple webpage built from HTML and CSS, and a TypeScript-based backend. The song works by loading a series of MP3 files using Tone.js, and moving through the song using a state machine. At each state, the code determines how pressing the button affects the music, and at what point to trigger the next state.
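As a rough illustration of the state machine idea, here is a minimal sketch. It is not the actual havenspace code: the state names, layer names, and press counts are all made up for the example, and the audio playback itself (done with Tone.js in the real piece) is left out so that the logic stands alone.

```typescript
// Hypothetical states; the real piece's states are not described here.
type SongState = "intro" | "verse" | "finale";

interface StateRule {
  // Name of the audio layer a button press triggers in this state (illustrative).
  layerOnPress: string;
  // Number of presses after which the song moves on.
  pressesToAdvance: number;
  // State to move to, or null when the song ends after this state.
  next: SongState | null;
}

// Assumed rules, one per state: what a press does and when to advance.
const RULES: Record<SongState, StateRule> = {
  intro: { layerOnPress: "pad", pressesToAdvance: 2, next: "verse" },
  verse: { layerOnPress: "melody", pressesToAdvance: 3, next: "finale" },
  finale: { layerOnPress: "choir", pressesToAdvance: 1, next: null },
};

class SongMachine {
  state: SongState = "intro";
  finished: boolean = false;
  private presses: number = 0;

  // Handle one button press. Returns the layer to trigger,
  // or null once the song has finished.
  press(): string | null {
    if (this.finished) return null;
    const rule = RULES[this.state];
    this.presses += 1;
    if (this.presses >= rule.pressesToAdvance) {
      this.presses = 0;
      if (rule.next === null) {
        this.finished = true;
      } else {
        this.state = rule.next;
      }
    }
    return rule.layerOnPress;
  }
}
```

In a browser, `press()` would be called from a spacebar `keydown` handler, and the returned layer name would pick which loaded audio file to start or stop.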

When I initially debuted the piece, I had a physical button that audience members could press. The button itself was from Adafruit, and I 3D printed a case to mount it in. I then connected the button to an Arduino board, which sent serial messages to my laptop over USB. An AutoHotkey script caught those messages and converted them into spacebar presses, which the website was able to recognize. It was a complicated system, but it ended up working quite well! For more info about the song, visit my Music page here, or view the source code here.

Click here to access havenspace.

Return to top

GLYPH

As havenspace shows, I really enjoy combining music with code when I'm able to. GLYPH was another experiment in that realm, this time built around the idea of using the audience's mobile devices to create an interactive music installation. To do this, I created a website that uses augmented reality to detect when the camera is pointed at one of several markers. When the phone is pointed at a marker, it produces a sound corresponding to that specific marker. Then, once there are many people in a single room looking at different markers, the entire song becomes audible.

During development, I split the project into three main systems:

- AR: The AR functionality is handled by the AR.js and three.js libraries. Each of the titular glyphs is a 2D barcode, a marker type that is especially easy to use for AR purposes. Once the site finds a barcode, it notes where it is and renders a few shapes over it as visual feedback.

- Audio: Once a barcode is in view, it's time to produce some sort of sound. This is done using Tone.js synthesizers and effects. A set of instruments is defined in the code, and a synth is selected based on which barcode is currently in view.

- Note Generation: Finally, we have to decide which notes the instrument is going to play. Ideally, all participants should be playing the same notes, because otherwise it'll just sound like a random mess. I found the simplest way to do this was with seeded random functions, using the system clock as the seed. While not infallible, it works in most use cases. Every 32 seconds, the system picks a random key and scale. Then each instrument is updated once per second, at which point it decides whether it's going to play a note, which note it's going to play, and when it'll play it. Using inheritance, I was also able to give each instrument slightly varied behaviors. The result is that although the song is randomly generated, there is still a recognizable melody and progression shared between all of the participants.
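The clock-seeded approach from the Note Generation step can be sketched roughly as follows. This is an illustration under assumptions rather than GLYPH's actual code: the generator (mulberry32, a common small seeded PRNG), the key and scale tables, the seed formula, and the `Instrument` and `BassInstrument` classes are all stand-ins chosen for the example.

```typescript
// mulberry32: a small seeded PRNG, used here only as a stand-in.
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Hypothetical note material.
const KEYS = ["C", "D", "E", "F", "G", "A", "B"];
const SCALE_NAMES = ["major", "minor"];

const SECTION_MS = 32000; // a new key and scale every 32 seconds
const TICK_MS = 1000; // instruments update once per second

interface SongSection {
  key: string;
  scale: string;
}

// Every device that calls this within the same 32-second window
// seeds the generator identically, so all of them get the same answer.
function sectionAt(nowMs: number): SongSection {
  const rng = mulberry32(Math.floor(nowMs / SECTION_MS));
  const key = KEYS[Math.floor(rng() * KEYS.length)];
  const scale = SCALE_NAMES[Math.floor(rng() * SCALE_NAMES.length)];
  return { key, scale };
}

class Instrument {
  id: number;
  playChance: number;

  constructor(id: number, playChance: number = 0.5) {
    this.id = id;
    this.playChance = playChance;
  }

  // Returns a scale degree (0 to 6) for the current one-second tick,
  // or null for a rest. The seed mixes the tick with the instrument id
  // (an assumed formula) so different instruments play different lines.
  noteAt(nowMs: number): number | null {
    const tick = Math.floor(nowMs / TICK_MS);
    const rng = mulberry32(tick * 31 + this.id);
    if (rng() > this.playChance) return null;
    return Math.floor(rng() * 7);
  }
}

// Inheritance gives an instrument its own behavior:
// this one only ever plays the root or the fifth of the scale.
class BassInstrument extends Instrument {
  noteAt(nowMs: number): number | null {
    const degree = super.noteAt(nowMs);
    if (degree === null) return null;
    return degree % 2 === 0 ? 0 : 4;
  }
}
```

On each phone, something like `sectionAt(Date.now())` and `new Instrument(1).noteAt(Date.now())` would then be evaluated once per second. Two phones only agree if their clocks land in the same second and the same 32-second window, which is one reason a scheme like this is not infallible.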

If you'd like to look at the source code, you can find it here.

Return to top

The Ballad of Lugh

The Ballad of Lugh is an action-platformer game developed in 96 hours, and the winner of Jame Gam #32, which had the theme "Folklore and mythology", the special object "Eye", and 31 total entrants. To fit the theme, I based my game on the Irish mythological characters Lugh and Balor. I also decided to use an art style inspired by storybooks and calligraphy, as it was both thematic and quick to produce. Most of my past game development experience has been in Unity, but for this game I decided to try Godot 4, an open-source game engine.

I gave myself the additional challenge of writing as much code from scratch as I possibly could, not using any pre-existing script assets like level managers or player controllers. It was a lot of work, but I found that a lot of my skills from Unity transferred over, and I'm very happy with how the game turned out.

Click here to play The Ballad of Lugh on itch.io.

Return to top